Like many IT departments, electricity is often an unsung hero in the computing realm. Those lightning-fast servers with super-smart CPUs, heaps of memory, and acres of storage get all the fame of a star quarterback, but they couldn’t do their jobs without the underlying power behind them. A server without electricity is just a heap of metal parts, useless for anything other than anchoring boats.

What kind of electricity does it take to keep a data center going? Let’s take a look in layman’s terms. Don’t worry; this won’t be a boring analysis of terms better suited for an engineering or a science class, but rather a practical overview with real-world examples.

The basics

Amps, volts, ohms, and watts

Electricity is measured in amps, volts, ohms, and watts. Figuring out the difference between them can be a challenge if you’re not an electrician or into DIY home repair, but it’s really quite easy.

- Amps (amperes): The actual electrical current, or moving electrons, coming through the power lines. Many devices are rated based on the amps they use or can support (such as outlets or circuit breakers). A wall outlet might be rated for 15 amps and a workstation plugged into it may consume 2.5 amps, leaving a comfortable margin.

- Volts: Often referred to as the “pressure” pushing amps through a designated path. A server may have an electric plug rated for 110 or 120 volts (but can run at differing levels of voltage). A higher voltage is generally more efficient, since the same amount of power can be delivered with less current. Many of the household devices we use every day run at 120 volts. One AA battery may run at 1.5 volts.

- Ohms: The resistance that slows electrical current, causing impediment (perhaps due to the size of the path). Defined as “the amount of resistance that allows one ampere of current to flow when one volt of potential is applied to it.”

- Watts: The actual electrical power at hand that is used by a device such as a server or network switch. A heavily loaded server might use 450-500 watts or more.

The following three formulas can be useful in quantifying these terms (note that fixed voltage is required for these to be reliable).

Amps = watts / volts

This means the electrical current is equal to the power used divided by the “pressure” at hand.

In the case of the workstation I mentioned above, it uses 300 watts on a 120 volt outlet, so therefore draws 2.5 amps.

Volts = amps x ohms

This means the electrical “pressure” is equal to the current times the resistance.

For example, 0.25 amps being resisted by 12 ohms would result in 3 volts. I realize this is a bit abstract, but the goal here is to focus more on volts than ohms, at least when it comes to data center power.

Watts = volts x amps

This means the amount of power is equal to the pressure times the current.

For example, a server hooked into a 120 volt outlet running at 3.2 amps would consume 384 watts.
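The three formulas above can be sketched in a few lines of Python. This is only an illustration; the function names are my own, and the inputs are the workstation, resistance, and server examples already given.

```python
def amps(watts: float, volts: float) -> float:
    """Current drawn: amps = watts / volts."""
    return watts / volts


def volts(amps_drawn: float, ohms: float) -> float:
    """Electrical "pressure": volts = amps x ohms."""
    return amps_drawn * ohms


def watts(volts_supplied: float, amps_drawn: float) -> float:
    """Power consumed: watts = volts x amps."""
    return volts_supplied * amps_drawn


# The three worked examples from the text.
print(f"Workstation: {amps(300, 120):.2f} amps")
print(f"Resistance example: {volts(0.25, 12):.2f} volts")
print(f"Server: {watts(120, 3.2):.0f} watts")
```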

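Power is often expressed in kilowatts (kW) or even megawatts (MW) in the case of huge data centers. When the utility company sends your electric bill, it probably lists how many kilowatt-hours (kWh) you consumed last month. A kilowatt-hour is a measure of energy rather than power: one kilowatt used for one hour.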
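Since a kilowatt-hour is simply one kilowatt of power used for one hour, the figure on a bill can be estimated from a device's wattage and running time. Here is a minimal sketch; the function name, the 30-day month, and the assumption of a constant load are mine, not figures from a real bill.

```python
def energy_kwh(load_watts: float, hours: float) -> float:
    """Energy used, in kilowatt-hours, by a steady load running for `hours`."""
    return load_watts / 1000 * hours


# Assumption: the 384-watt server runs at a constant load for a 30-day month.
hours_in_month = 24 * 30
print(f"{energy_kwh(384, hours_in_month):.2f} kWh")
```

Real servers vary their draw with load, so treat the result as a rough upper estimate rather than a metered value.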

These formulas are important since server power is often referred to in watts or amps and capacity in volts or amps. You need to know how to measure or plan usage based on these figures.

Let’s say a server rack is installed with power strips that offer a 20 amp capacity, and it’s up to you to figure out how many servers you can safely plug into that strip. If these systems use 208 volts and you estimate that at maximum load they consume 400 watts of electricity, you can calculate the amps involved by dividing 400 by 208.

Do you see how 208 volts makes things more efficient? At 208 volts, the servers draw 1.92 amps each (this can vary based on load, so it’s not set in stone), whereas at 120 volts they’d draw 3.33 amps. The higher voltage delivers the same power with less current.

Since the power strip can accommodate the use of 20 amps, hypothetically you could hook 10 of these servers into it, but you never want to max out like that since it could overload the power strip. I recommend leaving at least 20% of the amps unused (in fact, electrical codes generally limit continuous loads to 80% of a circuit’s rating). That leaves 16 usable amps, and so I would consider it safe to plug no more than eight servers into this strip.
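The power strip calculation can be written as a small helper. This is a sketch under the assumptions in the text (a 20 amp strip, 208 volts, 400 watts per server at maximum load, and 20% of the amps left unused); `max_servers` and its `headroom` parameter are hypothetical names chosen for illustration.

```python
import math


def max_servers(strip_amps: float, volts: float, server_watts: float,
                headroom: float = 0.20) -> int:
    """Whole servers that fit on a strip while leaving `headroom` of its amps unused."""
    usable_amps = strip_amps * (1 - headroom)
    amps_per_server = server_watts / volts
    return math.floor(usable_amps / amps_per_server)


print(max_servers(20, 208, 400))                # with 20% of the amps left unused
print(max_servers(20, 208, 400, headroom=0.0))  # theoretical maximum, not recommended
print(max_servers(20, 120, 400))                # the same servers at 120 volts
```

Running the same servers at 120 volts shows why the higher voltage matters: each one draws more current, so fewer fit on the same strip.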

Your job here differs slightly if the server rack is powered and comes with a rating in kilowatts; generally 4-5 kW seems to be the standard for a server rack, though this can increase depending on the data center involved. If that’s the case, you could plug 10-12 of these 400-watt servers in, but once more you’ll want to operate from a standpoint of caution and only utilize seven or eight.

AC and DC power

Alternating current (AC) and direct current (DC) are the two forms in which electricity is delivered.

AC current is generally delivered to outlets at 120 or 240 volts and is considered “traditional” power, which is used by grids around the world. It can be transformed into different voltages quite easily. AC current “changes direction” constantly, completing 50 or 60 full cycles per second depending on the country (cycles per second are measured in hertz, or Hz).

DC current is a bit harder to quantify. Unlike AC, it remains constant and doesn’t change direction. It’s commonly associated with batteries or stored power such as that in a UPS. A car battery might run at 12 volts for instance. Some electrical systems such as telecommunications equipment run directly on DC power. A laptop will work off DC power if on battery.

Any given data center is quite likely going to use AC and DC power, since AC is often converted to DC or vice versa. Inbound AC power is predominant in many data centers today, but the switch is slowly being made to DC power.

Why the change? DC can be transmitted over long distances with lower losses, can offer lower costs, and is often more reliable. While not always more efficient than AC power (DC needs to be converted to a lower voltage for some consumers such as residences), it offers an advantage when it comes to renewable energy since less AC-DC conversion is needed.

So how is a data center powered?

Now that we’ve gone over how to measure and calculate electrical needs and the types of power available, let’s look at a hypothetical data center I’ll call TDC (short for The Data Center).

All the power in TDC comes from a large UPS fed by AC current. The UPS can supply 80 kilowatts at 480 volts. As the UPS draws AC power, it converts some of that energy to DC and stores it in batteries for use in the event of a power outage.

The UPS provides power to several circuit breakers in TDC. These breakers run at 30 amps and 208 volts, which means they can handle 6240 watts, though they should be subjected to no more than 4992 watts to stay within that “80% or lower” safety zone. If you expect your servers to run at 600 watts max (say in extreme conditions), then eight is your limit (4992 divided by 600 is about 8.3), and seven leaves a little extra margin.

Another way to look at it is if you’ve got servers running at 3.3 amps, you wouldn’t want more than seven plugged into each breaker.
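Both views of the breaker limit, by watts and by amps, come down to the same 80% rule. The sketch below assumes the 30 amp, 208 volt breaker from the text; the function names and the `load_limit` parameter are hypothetical.

```python
def servers_by_watts(breaker_amps: float, volts: float, server_watts: float,
                     load_limit: float = 0.80) -> int:
    """Servers per breaker when each server's draw is known in watts."""
    budget_watts = breaker_amps * volts * load_limit
    return int(budget_watts // server_watts)


def servers_by_amps(breaker_amps: float, server_amps: float,
                    load_limit: float = 0.80) -> int:
    """Servers per breaker when each server's draw is known in amps."""
    budget_amps = breaker_amps * load_limit
    return int(budget_amps // server_amps)


print(servers_by_watts(30, 208, 600))  # 600-watt servers
print(servers_by_amps(30, 3.3))        # 3.3-amp servers
```

The two answers differ slightly because 3.3 amps at 208 volts works out to a bit more than 600 watts per server.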

So, the servers are plugged in to rack-mounted power strips, which then hook into these circuit breakers. The data center isn’t powering on just the servers — there are hefty electrical requirements for the foundation of the data center as well:

- Air handlers/cooling/heating/ventilation

- Lighting

- UPS systems and generators

- Fire suppression systems

- Alarm systems

How much power this data center consumes depends on many factors — the primary ones are size, number of servers, air control strategies, and how many other devices are hooked up in TDC.

However, for the sake of argument, let’s say there are 40 critical servers each drawing 400 watts, which totals 16000 watts, or 16 kilowatts. Since the UPS can supply five times that amount, this leaves plenty of breathing room for the other elements in the data center.
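The same headroom check for TDC can be expressed in code. This sketch uses only the figures given for the hypothetical data center (40 servers at 400 watts and an 80 kilowatt UPS); the function and variable names are my own.

```python
def it_load_kw(server_count: int, watts_per_server: float) -> float:
    """Total server load in kilowatts."""
    return server_count * watts_per_server / 1000


ups_capacity_kw = 80.0
load_kw = it_load_kw(40, 400)
remaining_kw = ups_capacity_kw - load_kw

print(f"Server load: {load_kw:.1f} kW")
print(f"Left for cooling, lighting, and other systems: {remaining_kw:.1f} kW")
print(f"Share of UPS capacity used by servers: {load_kw / ups_capacity_kw:.0%}")
```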

When a power outage hits, the UPS employs a power inverter to convert its stored DC power to AC current so all components in TDC can be kept running. The good news is that many things like chillers or air conditioners can be set to reduce power or turn off entirely if need be in order to preserve the more important systems during this scenario.

Of course, a UPS can only run for so long; how long depends on its battery capacity and the load it carries. Eventually, given enough time, every UPS out there will grind down to a halt if it’s not fed fresh AC current. However, with luck, a good UPS can keep a data center afloat long enough for the main grid to be repaired and brought back up, or at least give IT staff enough time to fail over to a disaster recovery site. You do have one, right?

Dimming the lights

This is just scratching the surface of electricity — how it works and ways it can be used, measured, and charted. It’s a critical topic for IT professionals to understand, especially in this day and age of power shortages and green energy initiatives to reduce power consumption. According to Wikipedia, as of two years ago “the cost of power for the data center is expected to exceed the cost of the original capital investment.”

With that in mind, I’ll close with a final formula: PUE.

PUE stands for Power Usage Effectiveness and represents the total data center power divided by the power consumed by IT equipment. This indicates how efficiently a data center is running, and again according to Wikipedia: “The average data center in the US has a PUE of 2.0 meaning that the facility uses one watt of overhead power for every watt delivered to IT equipment. State-of-the-art data center energy efficiency is estimated to be roughly 1.2.”
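PUE is a simple ratio, so it is easy to compute once you have both measurements. In this sketch the 16 kW IT load is TDC's server load from earlier, while the total facility figures (32 kW and 19.2 kW) are assumptions picked to reproduce the 2.0 and 1.2 values quoted above; the function names are hypothetical.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_per_it_watt(pue_value: float) -> float:
    """Watts of overhead (cooling, lighting, losses) per watt delivered to IT gear."""
    return pue_value - 1


# Assumed facility totals for a 16 kW IT load.
for total_kw in (32.0, 19.2):
    value = pue(total_kw, 16.0)
    print(f"PUE {value:.2f}: {overhead_per_it_watt(value):.2f} W overhead per IT watt")
```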

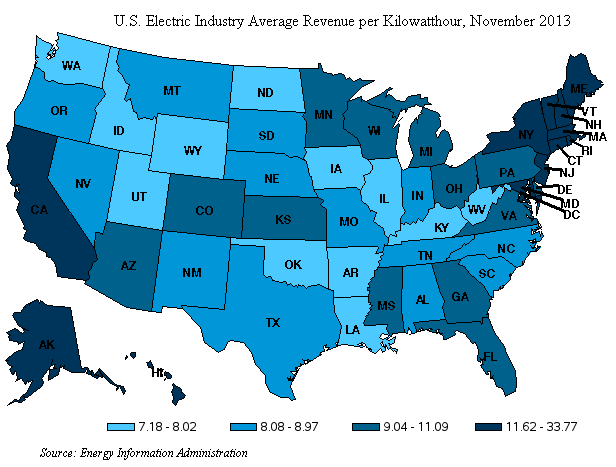

Figure A illustrates the average per-state revenue brought in by the electric companies in the US based on cents per kWh (as of November 2013).

Figure A

If your data center runs in the Northeast, California, Hawaii, or Alaska, you might be paying a significant amount of money for electricity, so it’s critical to get your PUE as low as possible. Even if your data center is in Nevada or Oregon and enjoys cheaper electricity rates, nothing is guaranteed long-term.
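To see why a lower PUE matters more where electricity is expensive, here is a rough annual cost estimate. The 10 cents per kWh rate is a placeholder I chose for illustration, not a value read from Figure A, and the calculation assumes a constant load all year; `annual_cost_dollars` is a hypothetical helper.

```python
HOURS_PER_YEAR = 24 * 365


def annual_cost_dollars(it_load_kw: float, pue_value: float,
                        cents_per_kwh: float) -> float:
    """Yearly electricity cost for a facility running at a constant load."""
    facility_kw = it_load_kw * pue_value
    return facility_kw * HOURS_PER_YEAR * cents_per_kwh / 100


# Assumptions: 16 kW of IT load and a placeholder rate of 10 cents per kWh.
for pue_value in (2.0, 1.2):
    cost = annual_cost_dollars(16.0, pue_value, 10.0)
    print(f"PUE {pue_value}: ${cost:,.2f} per year")
```

Substituting your own rate and measured load will give a more meaningful comparison than these placeholder numbers.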

Thinking about how your data center consumes power and what you can do to make it work more efficiently is something you should start doing today.

Disclaimer: TechRepublic, ZDNet, and Tech Pro Research are CBS Interactive properties.