It’s undoubtedly true that “software companies [are taking] over large swathes of the economy,” just as Andreessen Horowitz co-founder Marc Andreessen once predicted. Books became easier to buy (Amazon). Movies can stream in your home (Netflix). Heck, we eventually started riding in strangers’ cars (Uber) and staying in their homes (Airbnb). But for all the gushing about digital transformation, such innovations are arguably “soft” compared with building something “hard” like a high-performance computing (HPC) application.


Yet we’ve seen relatively few such applications.

That may be changing. As a new study from hybrid HPC cloud startup Rescale illustrates, we’re starting to see unprecedented innovation cycles in a wide range of “hard” industries, from rockets and supersonic transport to nuclear fusion and pharmaceuticals. The report’s press release highlights its main findings and pinpoints a key reason for this rise in HPC applications: the cloud. As the release puts it, “[C]loud computing will reshape the world of physical things as profoundly as cloud has disrupted the digital computing world over the past two decades.”

I’ve covered the role of the cloud in unlocking the hard sciences before, but it’s worth revisiting the topic in light of this new survey data.

A very cloudy friend

Years ago, Matt Wood, then general manager of AWS’ data science team, explained how cloud elasticity lets businesses (or scientists) scale their infrastructure to match the hard questions they want to ask:

“Those that go out and buy expensive infrastructure find that the problem scope and domain shift really quickly. By the time they get around to answering the original question, the business has moved on,” Wood said. “You need an environment that is flexible and allows you to quickly respond to changing big data requirements. Your resource mix is continually evolving; if you buy infrastructure it’s almost immediately irrelevant to your business because it’s frozen in time. It’s solving a problem you may not have or care about any more.”

Now couple that underlying infrastructure with something like Rescale, which sits atop an enterprise’s cloud of choice to power HPC-as-a-Service. Rescale’s software platform gives scientists and engineers access to massive pools of the latest chip architectures, with the fastest interconnects and memory, so they can run enormous real-world simulations over and over.

For example, Boom Supersonic designed and built a prototype of a supersonic transport on Rescale in a matter of years and then secured an order from United Airlines for up to 50 of its supersonic airliners. And, perhaps best of all for Boom co-founder and CTO Josh Krall, Rescale’s platform gave Boom “resources comparable to building a large on premise HPC center” all while enjoying “minimal capital spending and resources overhead.”

In the 1960s, it took more than two decades of design and wind tunnel testing for the U.S. just to get one supersonic transport prototype that never flew commercially. Today, thanks to the cloud, private companies can build rockets to send to Mars.

How are other companies using the cloud to put HPC to work?

More and faster

To gather data for its report, Rescale surveyed more than 230 practitioners of compute-driven engineering. Among its findings about the dawning era of unconstrained compute:

- Improved access to computational resources is tied to improved project success, such as meeting budget and timeline goals;

- 83% use simulation throughout the product lifecycle when they have easy access to computational resources;

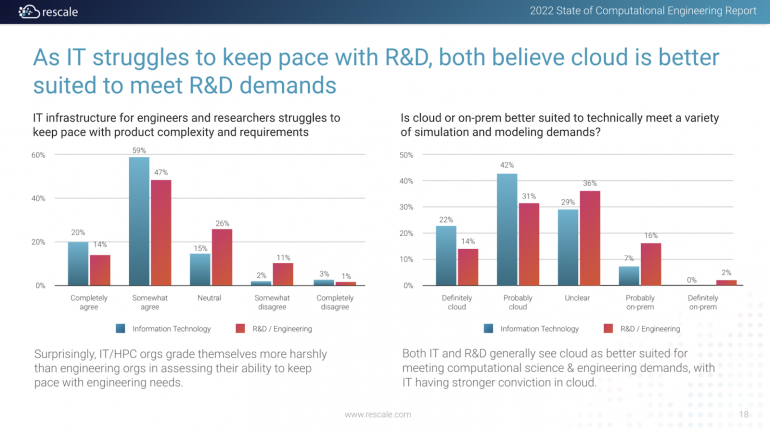

- 61% said IT infrastructure struggles to keep pace with product complexity and requirements;

- 78% utilize cloud-based HPC with over 50% using it consistently for engineering and science workloads.

Despite challenges, both R&D and IT see cloud helping to improve use of HPC (Figure A).

Figure A

As Gartner vice president and analyst Chirag Dekate has said, “HPC applications are fundamentally different from enterprise applications in their architecture, scale, and complexity,” in part because “a single HPC application [can] span hundreds [to] thousands of [processor] cores.” Making things even more complicated, he continued, “HPC architectures [also] necessitate dense coupling across systems,” and “[a] specialized infrastructure stack has traditionally been challenging to leverage through cloud environments.” Fortunately, “[w]e are now at an inflection point where the value-capture potential from cloud-based HPC strategies will outpace traditional HPC value-capture models.”

The result is more cloud, made more useful for HPC applications by platforms like Rescale. While few will complain about having streaming video in our homes, there’s potentially more to celebrate in the hard scientific breakthroughs that cloud-based HPC should bring.

Disclosure: I work for MongoDB, but the views expressed herein are mine.